The model that connects bosons with a GUT (Grand Unified Theory) and all fundamental forces might look like this.

Things like crossing gravitational fields might form material. But those gravitational waves must have some kind of source. When a force travels through the universe, it requires a force carrier, such as a boson, that transfers it. And there must also be some force for the boson to carry.

Without a force that touches the boson, the boson cannot carry force. When a boson travels through the quantum fields, those fields touch it. If the energy level of those fields is lower than the boson's energy level, the boson releases energy.

Without that touch, the boson cannot transport energy or force. In some models, the size of the carrier boson determines the force that it carries. In the field model, the boson travels through the quantum field (or Higgs field), and that field touches it. The sizes of those bosons, or force-carrier particles, differ, and when they travel through the universe, the quantum field touches them like a stamp or a tape.

The size of that tape determines which of the four interactions the particle transmits. So when a boson forms inside a proton or neutron at the center of an atom, it is like an energy whirl left over from the standing wave that forms between the three quarks.

Then that boson, or gluon, starts to travel out of that power field, and its size grows. Whenever the boson crosses the quantum field, it grows bigger and transmits a different force. In some unification theories, the four fundamental interactions are aspects of the same force. In this model, all of those forces are wave movements, but with different wavelengths.

Protons are more complicated than neutrons, and some bosons may form between those smaller particles. Still, the main particles in the proton are two up quarks and one down quark.

Fundamental interactions: (Wikipedia/Fundamental interaction)

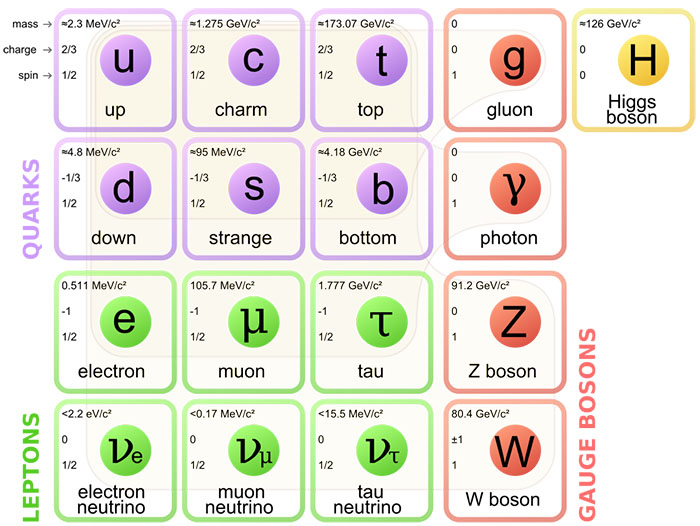

Standard Model:

It's time for a new Big Bang Theory.

One galaxy doesn't make the universe, and it doesn't break any theory. In redshift measurements, the apparent redshift of an object may be stronger than expected. In such models, a supermassive black hole, or a "zombie" galaxy full of black holes, could sit behind the visible galaxy on the same line of sight. Its pull would then be stronger than assumed, and that extra pull stretches the wave movement.
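To make "stretching the wave movement" concrete: astronomers express redshift as z = (λ_observed − λ_emitted) / λ_emitted. The Python sketch below computes z and, as a separate and simplified case, the gravitational redshift of light emitted by a static source at radius r from a non-rotating mass M and received far away (Schwarzschild metric), where 1 + z = (1 − r_s/r)^(-1/2) and r_s = 2GM/c². The function names and the example wavelength and mass are hypothetical, chosen only for illustration. Note that the gravitational formula describes light climbing out of a mass's own potential well, not light passing a black hole that sits behind a galaxy, so it only shows how a strong pull can add to a measured z.

```python
import math

G = 6.67430e-11        # Newtonian gravitational constant (CODATA 2018), m^3 kg^-1 s^-2
C = 299_792_458.0      # speed of light in vacuum, m/s


def redshift(lambda_emitted: float, lambda_observed: float) -> float:
    """Redshift z: the fractional stretch of a wavelength between source and observer."""
    if lambda_emitted <= 0:
        raise ValueError("emitted wavelength must be positive")
    return (lambda_observed - lambda_emitted) / lambda_emitted


def schwarzschild_radius(mass_kg: float) -> float:
    """r_s = 2GM/c^2 for a non-rotating mass, in metres."""
    return 2.0 * G * mass_kg / C**2


def gravitational_redshift(mass_kg: float, r_m: float) -> float:
    """z for light from a static emitter at radius r_m, received by a distant observer.

    Uses the Schwarzschild result 1 + z = (1 - r_s / r)^(-1/2).
    """
    r_s = schwarzschild_radius(mass_kg)
    if r_m <= r_s:
        raise ValueError("emitter must be outside the Schwarzschild radius")
    return 1.0 / math.sqrt(1.0 - r_s / r_m) - 1.0


if __name__ == "__main__":
    # H-alpha line: 656.28 nm in the laboratory, here assumed to be observed at 721.908 nm.
    print(f"z = {redshift(656.28, 721.908):.3f}")
    # Emitter at twice the Schwarzschild radius of a 10^30 kg mass (illustrative values).
    m = 1.0e30
    print(f"gravitational z = {gravitational_redshift(m, 2.0 * schwarzschild_radius(m)):.3f}")
```

A measured z on its own only says how much the wavelength was stretched; separating Doppler, cosmological, and gravitational contributions needs extra information about the source.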

It's time for a new Big Bang theory, which we can call the modified Big Bang theory. The biggest weakness of the Big Bang theory is that it cannot explain where the material came from. Material can turn into wave movement, and vice versa, but there must be some wave movement that formed the material in the first place. Of course, material can come out of a singularity, but then the problem is where that singularity came from.

The Big Bang theory is over, or at least it requires adjustment, but we can still talk about the "Bang" as the beginning of our universe. The "bang" was just not as big or unique as we might want to believe. Material is one form of energy, or, put the other way around, energy is one form of material. So what is energy?

It is wave movement, or movement where small strings are moving. The Schwinger effect can turn energy, in the form of a sufficiently strong electric field, into particle-antiparticle pairs, and annihilation turns particles back into energy or wave movement. But the main problem with the original Big Bang theory is that every model we know requires some kind of energy or material field to exist before the Big Bang.
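As a rough scale for how much energy it takes to turn "wave movement" into material: creating an electron-positron pair at rest needs at least 2mc² (about 1.022 MeV), and the Schwinger effect only becomes significant near the critical field E_S = m²c³/(eħ), roughly 1.3 × 10^18 V/m. The Python sketch below evaluates both numbers from CODATA 2018 constants. The function names are hypothetical, and the sketch gives orders of magnitude only, not the full pair-production rate.

```python
# Physical constants (CODATA 2018), SI units
M_E = 9.1093837015e-31   # electron mass, kg
C = 299_792_458.0        # speed of light in vacuum, m/s
Q_E = 1.602176634e-19    # elementary charge, C
HBAR = 1.054571817e-34   # reduced Planck constant, J*s


def pair_threshold_energy(mass_kg: float = M_E) -> float:
    """Minimum energy (J) to create a particle-antiparticle pair at rest: 2*m*c^2."""
    return 2.0 * mass_kg * C**2


def schwinger_critical_field(mass_kg: float = M_E, charge_c: float = Q_E) -> float:
    """Critical electric field (V/m) E_S = m^2 c^3 / (q * hbar).

    Well below this value, vacuum pair production is exponentially suppressed.
    """
    return mass_kg**2 * C**3 / (charge_c * HBAR)


if __name__ == "__main__":
    # Convert joules to MeV by dividing by the elementary charge and 10^6.
    print(f"pair threshold: {pair_threshold_energy() / Q_E / 1e6:.3f} MeV")
    print(f"Schwinger field: {schwinger_critical_field():.2e} V/m")
```

The huge critical field is why the Schwinger effect has not yet been observed directly with static fields in the laboratory.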

In the oversimplified model of the Big Bang theory, some kind of "singularity" exploded in a total vacuum. That is an excellent model, except that the material must come from somewhere. A good explanation for that is the Phoenix universe. In that model, the past universe ended in a Big Crunch, where all material fell into a giant black hole.

When the black hole pulls the last remnants of the quantum field inside it, the quantum (or Higgs) field around the black hole becomes too weak to press the black hole into its form. Then the black hole starts to vaporize, and the colliding waves form the new universe. In some versions of that theory, multiple black holes remained in that ancient universe. Those black holes then exploded, and their colliding waves formed the universe.

But then, where did that past universe come from?

In some models, an energy beam dropped in from another dimension. The weakness is that the energy must interact with some kind of power field, which requires a quantum field to exist before the energy beam entered our three dimensions. And that field cannot form from nothingness.

Total emptiness cannot form material or anything else. So the multiverse theory is one of the simplest ways to explain things like dark energy, dark matter, and the beginning of the universe. In oversimplified models, dark energy is energy whose origin is outside our universe.

What makes the multiverse theory interesting, and funny, is that we may never be able to prove it. The multiverse theory says that there are other universes and that we live in one of them. Many other universes can exist, and they can be different from ours.

Those other universes might be made of antimatter, just as there may be antimatter stars, antimatter solar systems, or even antimatter galaxies in our universe. Or their age can be different. The size of elementary particles in those other universes might also differ from that in our universe. If another universe is very old, its energy level is lower than in our universe, and that causes a situation where light from our universe pushes light from the other universe away.

So in those models, we cannot see the other universes, and the explanation for dark energy and dark matter is that they come from another universe.

https://www.forbes.com/sites/startswithabang/2019/03/15/this-is-why-the-multiverse-must-exist/?sh=5a0c613a6d08

https://home.cern/science/physics/standard-model

https://en.wikipedia.org/wiki/Fundamental_interaction

https://en.wikipedia.org/wiki/Grand_Unified_Theory

https://en.wikipedia.org/wiki/Multiverse

https://en.wikipedia.org/wiki/Redshift

https://en.wikipedia.org/wiki/Standard_Model

See also:

Dark matter

Dark energy

https://visionsoftheaiandfuture.blogspot.com/2023/04/gut-grand-unified-theory-theory-and.html